ISL_Chapter9_Support Vector Machines

Written on March 7th, 2018 by hyeju.kim

9.1 Maximal Margin Classifier

9.1.1 What Is a Hyperplane?

- in a p-dimensional space, a hyperplane is a flat affine subspace of dimension p-1

ex) in two dimensions, a hyperplane is a line $\beta_0 + \beta_1X_1 + \beta_2X_2 = 0$

- divide p-dimensional space into two halves(according to >0 or <0)

9.1.2 Classification Using a Separating Hyperplane

-

a test observation is assigned a class depending on which side of the hyperplane it is located

-

magnitude of $f(x^) = \beta_0 + \beta_1x_1^ + \beta_2x_2^* + … +\beta_px_p^*$

- if far from xero, confident

9.1.3 The Maximal Margin Classifier

- seperating hyperplane for which the margin is largest- that is, it is the hyperplane that has the farthest minimum distance to the training observations.

- margin : the perpendicular distance from each training observation to a given seperating hyperplane; the smallest such distance is the minimal distance from the observations to the hyperplane, and is known as the margin

- can lead to overfitting when p is large

- if $\beta_0, \beta_1, … , \beta_p$ are the coefficients of the maximal margin hyperplane, then the maximal margin classifier classifies the test observation $x^$ based on the sign of $f(x^)$

- support vector : they “support” the maximal margin hyperplane in the sense that if these points wer moved slightly then the maximal margin hyperplane would move as well.

9.1.4 Construction of the Maximal Margin Classifier

- optimization?

-

- (9.11) : each observation will be on the correct side of the hyperplane, provided that M is positive

- (9.10) : with this constraints the perpendicular distance from the ith observation to the hyperplane is given by $y_i(\beta_0 + \beta_1x_{i1} + \beta_2x_{i2} + … +\beta_px_{ip}$

- (9.10)&(9.11): each observation is on the correct side of the hyperplane and at leasta a distance M from the hyperplane

- M : the margin of our hyperplane

- (9.9) : the optimization problem chooses $\beta_0, \beta_1, … , \beta_p$ to maximize M

9.1.5 The Non-separable Case

- problem arises when if a separating hyperplane exists

- find a hyperplane that almost separtes the classes, using soft margin = support vector classifier

9.2 Support Vector Classifiers

9.2.1 Overview of the Support Vector Classifier

- why support vector classifier?

- greater robustness to individual observations

- better classification of most of the training observations

- allow some observations to be on the incorrect side of the margin, or even the incorrect side of the hyperplane(The margin is soft because it can be violated by some of the training observations)

9.2.2 Details of the Support Vector Classifier

-

-

(9.14) : $\epsilon_1,…,\epsilon_n$ are slack variables that allow individual observations to be on the wrong side of the margin or the hyperplane. slack variable $\epsilon_i$ tells us where the $i$th observation is located, relative to the hyperplane and relative to the margin.

- if $\epsilon_i = 0$, then the $i$th observation is on the correct side of the margin

- if $\epsilon_i > 0$, then the $i$th observation is on the wrong side of the margin

- if $\epsilon_i > 1$, then the $i$th observation is on the wrong side of the hyperplane

?? slack variable research needed

-

(9.14): the tuning parameter $C$ determines the number and severity of the violations to the margin(and to the hyperplane) that we will tolerate = a budget for the amount that the margin can be violated by the n observations

- if $C=0$ , simply amounts to the maximal margin hyperplane optimization

- if $C>0$, no more than $C$ observations can be on the wrong side of the hyperplane

- the budget $C$ increases, we become more tolerant of violations to the margin, and so the margin will widen

- $C$ chosen by cross-validation

- bias-variance trade-off :

- if C is small, low bias and high variance

- if C is large, high bias and low variance

-

(9.12)-(9.15): only obervations that either lie on the margin or that violate the margin (support vectors)will affect the hyperplane, and hence the classifier obtatined. = an observation that lies strictly on the correct side of the margin does not affect the support vector lcassifier

- if $C$ is large, margin is wide, so many support vectors $\to$ low variance, high bias

- if $C$is samll, fewer support vectors $\to$ low bias, high variance

- decision rule is only based on the support vectors means that it is quite robust to the behavior of observations that are far away from the hyperplane.

-

9.3 Support Vector Machines

- non-linear boundaries

9.3.1 Classification with Non-linear Decision Boundaries

- enlarging the feature space using quadratic, cubic, and even higher-order polynomial functions of the predictors

- ex) fit a support vector classifier using 2p features( $X_1,X_1^2,X_2,X_2^2, … , X_p, X_p^2$)

- Then (9.12)–(9.15) would become

- ??? But in the original feature space, the decision boundary is of the form q(x) = 0, where q is a quadratic polynomial, and its solutions are generally non-linear

- other functions of the predictors can be used to enlarge the feature space, but be careful of a huge number of features

9.3.2 The Support Vector Machine

- an extension of the support vector classifier that results from enlarging the feature space in a specific way, using kernels.

- ??? However, it turns out that the solution to the support vector classifier problem (9.12)–(9.15) involves only the inner products of the observations (as opposed to the observations themselves). The inner product of two r-vectors a and b is defined as

-

-

http://ifyouwanna.tistory.com/entry/%EB%82%B4%EC%A0%81%EC%9D%98-%ED%99%9C%EC%9A%A9

-

$K(x_i, x_{i^`})$

-

kernel : a generalizaiton of the inner product, where K is some function that we will refer to as a kernel

-

a function that quantifies the similarity of two observations.

-

a linear kernel (Pearson (standard) correlation):

-

-

polynomial kernel(flexible. non-linear):

- d:positive integer

-

-

radial kernel(non-linear):

-

$\gamma$ : positive constant

-

if a given test observation $x^* = (x^_1 … x^p)^T$ is far from a training observation $x_i $in terms of Euclidean distance, then $\sum{j=1}^p(x^_j - x_{ij})^2$ will be large, and so (9.24) will be very tiny. This means that in (9.23), $x_i$ will play virtually no role in $f(x^)$. training observations that are far from $x^$ will play essentially no role in the predicted class label for $x^$

-

the radial kernel has very local behavior, in the sense that only nearby training observations have an effect on the class label of a test observation.

-

-

-

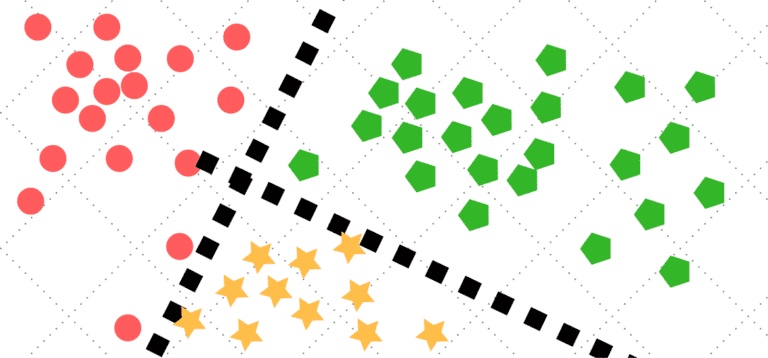

left - polynomial // right -radial

-

-

SVM

- When the support vector classifier is combined with a non-linear kernel such as (9.22), the resulting classifier is known as a support vector machine.

-

9.3.2 An Application to the Heart Disease Data

??roc curve is obtained by forming these predictions and computing the false postiive and true postive rates for a range of values of t

left - SVM polynomial kernel of degree d=1 // LDA : both perform well

right - SVM using a radial kernel. training set : $\gamma $ increases, overfitting, training error rate down($\gamma = 10^{-1}$)

but test set ($\gamma = 10^{-2} or \gamma = 10^{-3}$) better than ($\gamma = 10^{-1}$)

9.4 SVMs with More than Two Classes

9.4.1 One-Versus-One Classification

K classes : ${K}\choose{2}$ SVMs compare a pair of classes. For example, one such SVM might compare the $k$th class, coded as +1, to the $k^`$ class, codede as -1. The final classification is performed by assigning the test observation to the class to which it was most frequently assigned in these ${K}\choose{2}$ pairwise classifications

9.4.2 One-Versus-All Classification

K classes : $K$ SVMs compare one of the $K$ classes to the ramiaing $K-1$ classes. We assign the observation to the class for which $\beta_{0k} + \beta_{1k}x_1^* + \beta_{2k}x_2^* + … + \beta_{pk}x_p^*$ is the largest, as this amounts to a high level of confidence that the tesst observation belongs to the $k$th class rather than to any of the other classes.

9.5 Relationship to Logistic Regression

rewrite (9.12)-(9.15) as

-

$\lambda$ is a nonnegative tuning parameter

- when $\lambda$ is large then $\beta_1,..,\beta_p$ are small, more violations to the margin are tolerated, and a low-variance but high-bias classifier will result

- when $\lambda$ is small, then few violations to the margin will occur; a high-variance but low-bias classifier

- small value of $\lambda$ amounts to a small value of $C$ IN (9.15)

- $\lambda\sum_{j=1}^p \beta_j^2$ term in (9.25) is the ridge penalty term, and plays a similar role in controlling the bias-variance trade-off for the support vector classifier

-

(9.25) takes the “Loss + Penalty”

-

-

ridge regression and the lasso both take this form with

with $P(\beta) = \sum_{j=1}^p\beta^2j$ for ridge regression and $P(\beta) = \sum{j=1}^p\mid\beta\mid$ for the lasso

-

in the case of(9.25) loss function is

-

hinge loss

- closely related to the loss fnciton used in logistic regression

-

only support v ectors play a role in the classifier obtatined; observations on the correct side of the margin do not affect it

-

observations that are the correct side of the margin : $y_i(\beta_0 + \beta_1x_{i1}+…+\beta_px_{ip}) ≥ 1$ then loss function 0 , but for logistic regression, just very small for observations that are far from the decsion boundary

-

with this hinge loss function, the margin corresponds to the value one, and the width of the margin is determined by $\sum\beta_j^2$

-

-

when classes are well seperated, SVMs are preferred; in more overlapping regimes, logistic regression is often preferred

-

for historical regions, the use of non-linear kernels is much more widespread in the context of SVMs than in the context of logistic regression or other methods

-