ISL_Chapter8_Tree-Based Methods

Written on February 28th, 2018 by hyeju.kim

Chapter 8. Tree-Based Methods

8.1 The Basics of Decision Trees

8.1.1 Regression Trees

-

can know the important factor(Years > Hits)

Prediction via Stratification of the Feature Space

- We divide the predictor space—that is, the set of possible values for X1,X2, . . .,Xp—into J distinct and non-overlapping regions,R1,R2, . . . , RJ .

- the goal is to find boxes R1, . . . , RJ that minimize the RSS, given by

- For every observation that falls into the region Rj, we make the same prediction, which is simply the mean of the response values for the training observations in Rj .

-

top-down, greedy approach

- top-down : begins at the top of tree(at which point all observations belong to a single region) and then successively splits the predictor space

- greedy : at each step of the tree-building process, the best split is made at that particular step, rather than looking ahead and picking a split that will lead to a better tree in some future step.

Tree Pruning

-

instead of building the tree only so long as the decrease in the RSS due to each split exceeds some(high) threshold, first to grow a large tree $T_0$, and then prune it back in order to obtain a subtree $\to$ to select a subtree that leads to the lowest test errror rate $\to$ Cost complexity pruing(weakest link pruning): rather than considering every single subtree, considering a sequence of trees indexed by a nonegative tuning parameter $\alpha$

- parameter $\alpha$ controls a trade-off between the subtree’s complexity and it’s fit to the training data. $\to$ cross-validation

- $\alpha = 0$, equals $T_0$

- $\alpha$ increases, a price to pay for having a tree with many terminal nodes, and so the quantity (8.4) will tend to be minimized for a smaller subtree.

- parameter $\alpha$ controls a trade-off between the subtree’s complexity and it’s fit to the training data. $\to$ cross-validation

8.1.2 Classification Trees

-

predict that each observation belongs to the most commonly occurring class of training observations in the region to which it belongs.

-

classification error rate : the fraction of the training observations in that region that do not belong to the most common class

- not sufficiently sensitive for tree-growing

-

Gini Index :

###$G = \sum_{k=1}^{K}\hat{p}{mk}(1-\hat{p}{mk}) $ (8.6)

-

a measure of total variance across the K classes.

-

smaller value indicates purity

-

-

Cross Entropy:

- smaller value indicates purity

###$D = - \sum_{k=1}^{K}\hat{p}{mk}\log\hat{p}{mk} $ (8.7)

-

Gini Index or Cross Entropy are used to evaluate the quality of a particular split, and used when pruning the tree, but classification error rate is preferable if prediction accuracy of the final pruned tree is the goal

8.1.3 Trees Versus Linear Models

- Trees or Linear Models?

- the true decision boundary is linear $\to$ linear models

- complex and non-linear relationship between X and Y $\to$ trees

8.1.4 Advantages and Disadvantages of Trees

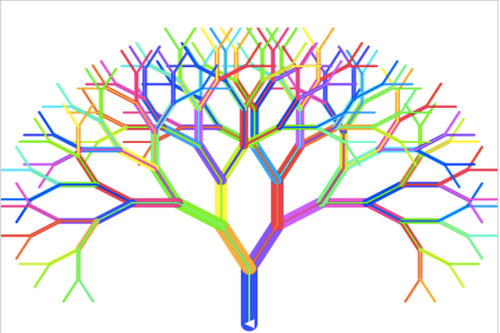

- easier to explain(even than linear reg)

- closely mirror human decison-making process

- displayed graphically and are easily interpreted even by a non-expert

- easily handle qualitative predictors even without making dummy variables

- trees predictive accuracy « linear models $\to$ improvement through bagging, random forests, and boosting

- non-robust(small change in the data can cause a large change in the final estimated tree)

8.2 Bagging, Random Forests, Boosting

8.2.1 Bagging

-

reduce the disadvantage of the high variance of decision trees

-

averaging a set of observations reduces variance ( $\sigma^2 \to \sigma^2/n$)

-

generate B different bootstrapped training data sets, and then train our method on the bth bootstrapped training set in order to get $\hat{f}^{*b}(x)$, and finallly average all the predictions, to obtain

$\hat{f}{bag}(x) = \frac{1}{B}\sum{b=1}^{B} \hat{f}^{*b}(x)$

-

the trees are deep, not pruned(each individual tree has high variance, but low bias. Averaging these B trees reduces the variance)

-

classification : a majority vote

-

the number of trees B is not critical parameter. a very large number of B will not lead to overfitting

Out-of-Bag Error Estimation

- instead of cross-validation

- on average, each bagged tree makes use of around two-thirds of the observations. The remaining one-third of the observations not used to fit a given bagged tree are referred to as the out-of-bag(OOB) observations.

- predict the response for the ith observation usin geach of the trees in which that observation was OOB. This will yield around B/3 predictions for ith observation

- OOB prediction can be obtained in this way for each of the n observation.

- regression : overall OOB MSE

- classification: OOB classication error

Variable Importance Measures

- hard to interpret than using a single tree

- an overall summary of the importance of each predictor using the RSS(regression) or GINI index(classification) decrease

8.2 Random Forests

- only use $m = \sqrt{p}$ predictors at each split

- why? the strong predictor make similar trees -> decorrelating the trees

- $m=p$ , same with bagging

- will not overfit if we increase B

8.2.3 Boosting

- boosting works simlarily as baggin, the difference is : the trees are grown sequentially

- each tree is grown using information from previously grown trees

- learn slowly

- fit a decision tree to the residuals from the model.

- fit a tree using the current residuals, rather than the outcom Y. then add this new decision tree into the fitted function in order to update the residuals.

- 3 parameters

- The number of trees B. boosting can overfit if B is too large. cross-validation to select B

- The shrinkage parameter $\lambda$, a small positive numver. the rate at which boosting learns. Typical values are 0.01 or 0.001. Very small $\lambda$ can require using a very large value of B

- The number $d$ of splits in each tree, which controls the complexity of the boosed ensemble. often $d=1$ works well, in shich case each tree is a stump, consisting of a ingle split. In this case, the boosted ensemble is fitting an additive model, since each term involves only a single variable. More generally $d$ is the interaction depth, and controls the interaction order of the boosted model, since d splits can involve at most d variables

- simple stumps with an interaction depth of one perform well if enough of them are included

- the growh of a particular tree takes into account the other trees that have already been grwon, smaller trees are typically sufficient.